Right now I'm taking advantage of the Christmas truce to listen to some good music. Having been a Paul Simon fan from the very beginning, I often have "Mother and Child Reunion" on my playlist. It gave me the idea of writing this blog post about another kind of reunion, perhaps less dramatic but not an easy one either: the one between Java and Docker or, more generally, Linux cgroups.

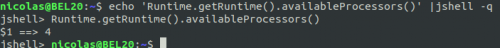

Historically speaking, Java was not at all a good citizen of the Linux cgroups world. When run in a container like Docker, Java 9 and older simply ignored the container's resource allocation settings and behaved as if they were the only consumer, trying to use all of the host's processors, memory, etc. To make this behaviour visible, we'll use JShell, the interactive command-line tool (REPL) available in Java 9 and higher. For example, in JShell, `Runtime.getRuntime().availableProcessors()` returns the number of processors currently available to the JVM.

Executed at the OS level, not in a Docker container, this call returns 4 on my machine: the laptop I'm using, a Dell XPS 13 running Ubuntu 18.04 LTS, has an Intel® Core™ i7-7560U CPU at 2.40 GHz with 2 physical cores and Hyper-Threading, i.e. 4 logical processors, which is what the JVM counts.

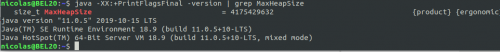

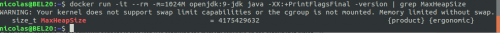
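The processor count can also be obtained outside JShell, from a small standalone program calling `Runtime.getRuntime().availableProcessors()`. A minimal sketch; the class name `CpuCheck` and its helper `cpuCount` are hypothetical, chosen only for illustration.

```java
// Hypothetical standalone equivalent of the JShell check.
public class CpuCheck {

    // Number of logical processors the JVM believes it may use.
    static int cpuCount() {
        return Runtime.getRuntime().availableProcessors();
    }

    public static void main(String[] args) {
        System.out.println("Available processors: " + cpuCount());
    }
}
```

Run on the host and then inside a container, this is a quick way to compare what the JVM sees in both places.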

If we want to see the amount of memory available to the JVM, we can ask it to print its final flag values, for instance with `java -XX:+PrintFlagsFinal -version`, and look at MaxHeapSize.

Here, the value of the parameter MaxHeapSize is 4175429632, i.e. a little less than 4 GB. Since I didn't limit the maximum heap size via the -Xmx argument, the JVM defaults to a quarter of the total available memory. And since my laptop has 16 GB of RAM (about 15.6 GB once the memory used by the integrated Intel® Iris Plus Graphics 640 (Kaby Lake GT3e) is subtracted), the result is as expected: 4 × 4175429632 = 16701718528 bytes, roughly 15.6 GB.

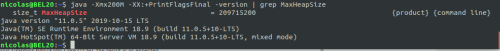
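To check these numbers from code, `Runtime.getRuntime().maxMemory()` gives the heap ceiling seen by the running JVM (depending on the garbage collector, it may be slightly below MaxHeapSize), while the quarter-of-RAM default is plain arithmetic. A minimal sketch; the class `HeapCheck` and its helpers are hypothetical, and the real JVM ergonomics also apply alignment and other rules that this simplification leaves out.

```java
// Hypothetical helper class illustrating the default heap computation.
public class HeapCheck {

    // Upper bound of the heap as seen by the running JVM, in bytes.
    // Depending on the garbage collector, this can be slightly lower than
    // the MaxHeapSize flag (e.g. one survivor space may be excluded).
    static long maxHeapBytes() {
        return Runtime.getRuntime().maxMemory();
    }

    // Simplified default: a quarter of the memory the JVM thinks it has.
    static long defaultMaxHeap(long physicalBytes) {
        return physicalBytes / 4;
    }

    public static void main(String[] args) {
        System.out.println("Runtime.maxMemory(): " + maxHeapBytes());
        // 16701718528 = 4 x 4175429632, i.e. roughly 15.6 GB
        System.out.println("Quarter of 16701718528: " + defaultMaxHeap(16_701_718_528L)); // 4175429632
    }
}
```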

Now, if I limit the maximum heap size to 200 MB, for example by passing `-Xmx200m`, the result is again as expected: 209715200, i.e. 200 × 1024² bytes.

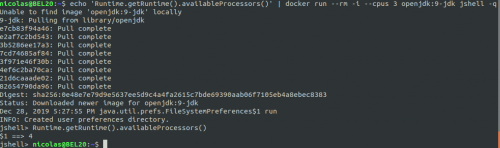

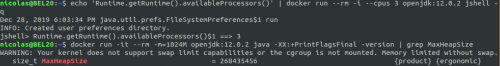
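The -Xmx value uses binary multiples, so 200m means 200 × 1024 × 1024 bytes. The hypothetical helper below sketches that conversion for the k, m and g suffixes only; it is an illustration of the arithmetic, not the JVM's own option parser.

```java
// Hypothetical converter for -Xmx style sizes; not the JVM's parser.
public class XmxSize {

    // Converts "512k", "200m", "1g" (or a plain byte count) into bytes,
    // using binary multiples (1k = 1024 bytes).
    static long toBytes(String size) {
        String s = size.trim().toLowerCase();
        char unit = s.charAt(s.length() - 1);
        long multiplier;
        switch (unit) {
            case 'k': multiplier = 1024L; break;
            case 'm': multiplier = 1024L * 1024; break;
            case 'g': multiplier = 1024L * 1024 * 1024; break;
            default:  return Long.parseLong(s); // no suffix: plain byte count
        }
        return Long.parseLong(s.substring(0, s.length() - 1)) * multiplier;
    }

    public static void main(String[] args) {
        System.out.println(toBytes("200m")); // 209715200
    }
}
```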

All these commands have been executed outside a container. Now, let's see what happens when we run them with Java 9 inside a Docker container limited to 3 cores.

As we can see, the result is still 4 cores, despite the fact that the Docker container runs with only 3! Regardless of the number of cores available to the container in which it runs, Java thinks that 4 cores are available and tries to use them all.

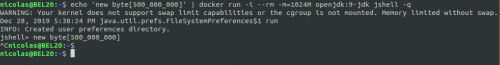
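Under cgroup v1, Docker's `--cpus` option is implemented through the CFS quota and period (for example, a quota of 300000 µs per 100000 µs period for 3 CPUs). The sketch below, with hypothetical names and assuming the cgroup v1 layout under /sys/fs/cgroup/cpu, shows roughly how a container-aware runtime can turn these values into a CPU count. It is a simplification: cpusets and CPU shares are ignored.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical sketch; assumes the cgroup v1 file layout.
public class CgroupCpus {

    // A non-positive quota (-1 in cgroup v1) means "no limit"; otherwise the
    // usable CPUs are quota / period rounded up, capped by the processor
    // count passed in.
    static int effectiveCpus(long quotaUs, long periodUs, int hostCpus) {
        if (quotaUs <= 0 || periodUs <= 0) {
            return hostCpus;
        }
        int limit = (int) Math.ceil((double) quotaUs / periodUs);
        return Math.max(1, Math.min(limit, hostCpus));
    }

    // Reads a single number from a cgroup file.
    static long readLong(Path file) throws IOException {
        return Long.parseLong(new String(Files.readAllBytes(file)).trim());
    }

    public static void main(String[] args) {
        int reported = Runtime.getRuntime().availableProcessors();
        Path dir = Paths.get("/sys/fs/cgroup/cpu");
        try {
            long quota = readLong(dir.resolve("cpu.cfs_quota_us"));
            long period = readLong(dir.resolve("cpu.cfs_period_us"));
            System.out.println("CPUs allowed by the CFS quota: "
                    + effectiveCpus(quota, period, reported));
        } catch (IOException | NumberFormatException e) {
            System.out.println("No cgroup v1 CPU quota found; JVM reports " + reported);
        }
    }
}
```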

Now, let's limit the container's memory to 1 GB.

As expected, Java 9 doesn't honour the memory constraint set on the Docker container and reports a maximum heap size of about 4 GB, a quarter of the host's memory, even though only 1 GB is available. What happens is that Java 9 confuses the memory available to the container in which it is running with the memory of the host machine running the container!
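A container-aware JVM instead bases its default heap on the container's memory limit, which cgroup v1 exposes in /sys/fs/cgroup/memory/memory.limit_in_bytes (a very large number when no limit is set). The simplified sketch below, with a hypothetical class name and an assumed host size of 16701718528 bytes, applies the same quarter rule to whichever is smaller, the limit or the host memory.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;

// Hypothetical sketch of a container-aware default heap size.
public class CgroupMemory {

    static final long GIB = 1024L * 1024 * 1024;

    // Use the cgroup limit when it is smaller than the host memory,
    // then take a quarter of it, as in the non-container case.
    static long defaultMaxHeap(long cgroupLimitBytes, long hostBytes) {
        long usable = Math.min(cgroupLimitBytes, hostBytes);
        return usable / 4;
    }

    public static void main(String[] args) {
        long host = 16_701_718_528L; // assumed host memory, roughly 15.6 GB
        System.out.println(defaultMaxHeap(GIB, host));            // 268435456 (256 MB)
        System.out.println(defaultMaxHeap(Long.MAX_VALUE, host)); // 4175429632 (no limit)
        try {
            String raw = new String(Files.readAllBytes(
                    Paths.get("/sys/fs/cgroup/memory/memory.limit_in_bytes"))).trim();
            System.out.println("cgroup v1 memory limit: " + raw);
        } catch (IOException e) {
            System.out.println("No cgroup v1 memory limit file found");
        }
    }
}
```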

But what if we ask the JVM to allocate a byte array that doesn't fit in the total memory allocated to the container?

Here we try to allocate a 500 MB byte array in a Docker container with a total of 1 GB of memory. This cannot work because, once the JVM itself is loaded, there is no longer enough room in the container for 500 MB. However, since the JVM thinks that 4 GB are available, it happily starts allocating the 500 MB until the memory used by the process exceeds the 1 GB limit. The Docker container is then killed and everything ends in a very confusing situation that only a Ctrl-C can still handle.

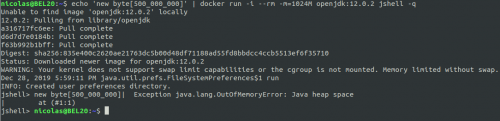
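The allocation experiment can also be written as a small program. In this hypothetical sketch, a JVM whose maximum heap is smaller than the 500 MB request throws java.lang.OutOfMemoryError, which is caught here only to make the outcome visible; catching this error is not a good practice in real code. After compiling it, running it with a small heap (for example `java -Xmx256m AllocationCheck`) should make `tryAllocate` return false.

```java
// Hypothetical sketch of the 500 MB allocation experiment.
public class AllocationCheck {

    // Tries to allocate a byte array of the given size and reports
    // whether it succeeded; OutOfMemoryError is caught for demonstration.
    static boolean tryAllocate(int bytes) {
        try {
            byte[] block = new byte[bytes];
            return block.length == bytes;
        } catch (OutOfMemoryError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        int size = 500 * 1024 * 1024; // 500 MB, as in the experiment above
        System.out.println("Allocated 500 MB: " + tryAllocate(size));
        System.out.println("Max heap: " + Runtime.getRuntime().maxMemory());
    }
}
```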

The good news, however, is that with Java 10 and higher these issues have been solved. For example, when the previous command is run with Java 12,

a java.lang.OutOfMemoryError is raised, as expected. The JVM is now able to honour the resource allocation constraints set on the container.

This time the JVM correctly honours the 3 cores allocated to its container as well as the maximum heap size of 256 MB, a quarter of the 1 GB allocated to the Docker container. So, finally, our story has a happy ending :-)